Quick Read

- The world of precise device-level microtargeting as a primary lever to drive performance marketing outcomes is long behind us.

- Numerous factors have driven drastic changes in the mechanics underpinning the major digital ad platforms.

- In this new landscape, the specific way in which your campaigns interface with channel algorithms (AKA optimization signal) is the primary determinant of success.

- In this article we explore what levers advertisers have to remain in control of their growth destiny & discuss some creative tips for improving your chance of positive outcomes.

“Good” audience targeting doesn’t exist any more.

One of the most popular marketing adages is “right message, right place, right time”. The 2010s digital marketing landscape was defined by vast swaths of device-level, individual data. Advertisers had high visibility into this granular data but had to make significant effort to manually press the right buttons and micro-target the right segments. Over the past five years, this paradigm has been turned on its head.

For one, the adage relies on an outdated concept of what platforms are capable of delivering.

In the past, Meta was able to take users’ declared interests and observed behaviors to model out purchase likelihoods. Advertisers were therefore able to adopt contextual targeting with greater success.

For example, an advertiser promoting running events could explicitly target audiences with running or related interests, then crunch the numbers and adjust based on which audiences were performing. The best-performing segments would be used to seed a new Lookalike audience, building a similar population of users.

That changed long ago.

Multiple factors caused Meta’s granular audience data to decline:

- Misuse by bad actors – such as the Cambridge Analytica data harvesting scandal – led Meta to impose significant audience size thresholds

- Meta deprecated third-party audiences from partners like Acxiom and Experian. These previously provided data like estimated household income

- People simply stopped updating their declared interests on Facebook, or stopped liking pages

- Meta and other platforms expanded sensitive categories to prevent unsavory or predatory practices in areas like Housing, Employment, Credit, Health and similar

- Privacy regulations, iOS platform changes and the rise of cookie blockers have narrowed the scope of visible data. Meta and other platforms lost access to the off-platform data that enabled them to model user behavior so closely

The result: In the past you may have been able to precisely identify niche, high-value audiences. Today those specific audiences blur into a crowd.

...for the majority of advertisers, there is no audience strategy as powerful as understanding how to leverage your own first party data effectively.


What about third party audiences?

Third-party data sources still power audiences today on several platforms across CTV, DSPs and social. But these sources remain highly questionable, as they are often based on stale data.

By and large, targetable audiences have very little meaning on most platforms, and are largely worthwhile only if you’re actively excluding people for whom the product is totally irrelevant (e.g. ED medicine for women; AARP benefits for people under 50) or those whom you have a legal obligation to exclude (e.g. children).

There do exist some extremely rare sources of exceptional product-audience fits. But, for the majority of advertisers, there is no audience strategy as powerful as understanding how to leverage your own first party data effectively.

Tuning signal quality is critical to reaching your best audience

So audiences aren’t good any more: Where do we go from here?

Today we are in an algorithmic era. Advertising platforms now overwhelmingly identify who to serve your ad to based on your optimization signal – how you are defining success in your campaigns.

These advanced algorithms do the heavy lifting of automatically processing vast aggregated and anonymized behavioral data. They will then dynamically adjust to signals in real-time and identify a shockingly select group of users they deem most likely to achieve an advertiser’s goals.

Think of it like an operator controlling a searchlight: If you give the operator a good description of what you’re looking for, they’ll know it when they see it and they’ll keep the searchlight fixed on that thing.

But, if you feed the operator a poorly drawn or misleading description, they’ll bounce around, unsure of whether they’ve ever really found anything. Or, even worse, they’ll train the beam doggedly on a false positive.

Platforms are forced to start with a small group of likely converters

Platforms can’t conceivably hit all of the screens that fit your description. They have to be selective.

Let’s take an example. You want to reach every single person in the United States. Let’s assume (wishfully) that:

- You’re going to get a world-beating CPM of $5

- Every single impression will go to a real and unique user

- Every single person in the US will log into this magical channel during this timeframe

With all of this wishful thinking, it would still cost you $1.75M to serve an impression to everyone in the US.
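The arithmetic behind that figure can be sketched in a few lines. This is only an illustration: the population of 350 million is an assumption (it is the round number implied by a $1.75M total at a $5 CPM), and the $50,000 budget is a hypothetical example, not a figure from our data.

```python
def cost_to_reach(population: int, cpm_usd: float) -> float:
    """Cost of serving exactly one impression to every person at a given CPM."""
    return population / 1000 * cpm_usd


def reach_for_budget(budget_usd: float, cpm_usd: float) -> float:
    """Best-case unique reach for a budget (one impression per person, no waste)."""
    return budget_usd / cpm_usd * 1000


US_POPULATION = 350_000_000  # assumption: round figure implied by the $1.75M total
CPM_USD = 5.0                # the "world-beating" CPM from the example

total = cost_to_reach(US_POPULATION, CPM_USD)
print(f"Cost to reach everyone once: ${total:,.0f}")  # $1,750,000

budget = 50_000  # hypothetical budget, for illustration only
reach = reach_for_budget(budget, CPM_USD)
print(f"Best-case share of the US reached: {reach / US_POPULATION:.1%}")
```

Even under these generous assumptions, a mid-sized budget covers only a small fraction of a nationally targeted audience.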

Most advertisers aren’t spending nearly that much and yet most advertisers also broadly target the US.

So, channels start with a very limited scope and have to pretty quickly find a subset of the total targeted audience which aligns with your description of success.

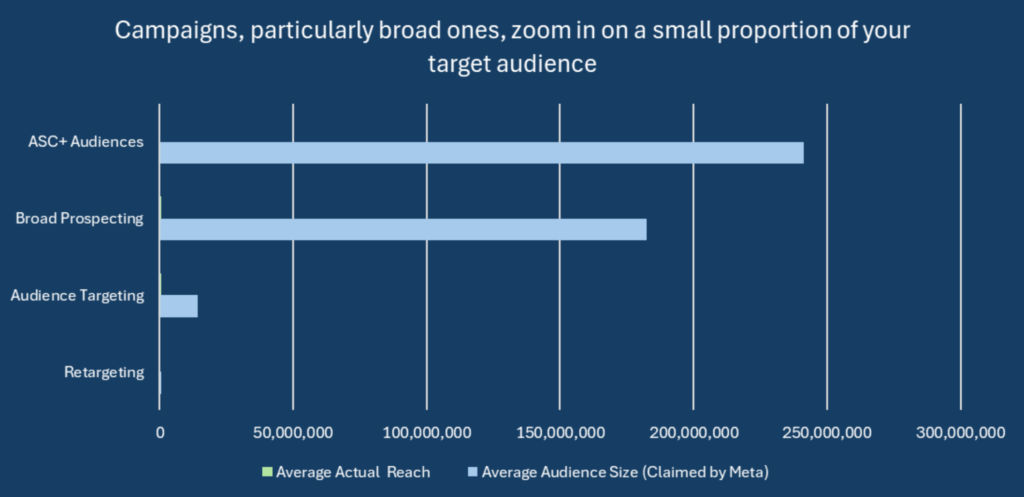

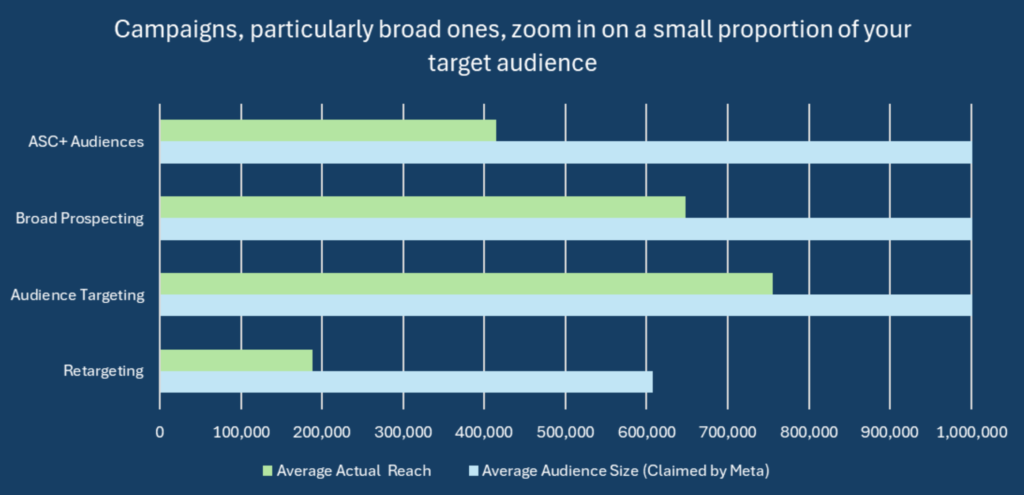

We’ve dug into average audience volume actually reached by conversion-optimized campaigns across a variety of industries. These all had sufficiently large spend (totalling around $1.5m).

We segmented them by the type of audience they were – which, to a great extent, determined the claimed audience size. The results are below:

If you have 60-20 vision or a monitor the size of your wall, you may be able to spot the average actual reach just next to the Y axis.

For those of you who don’t, let’s change the x-axis to a bare 1 million people.

The lesson is clear: Campaigns reach only a very small subsection of the total available audience. ASC+ audiences theoretically target the entire US, but on average they hit barely more than double the reach of retargeting campaigns.

“Audience Targeting” ad sets, limited to audience segments averaging 14 million people, had a greater average reach than ad sets where no audience targeting was defined whatsoever.

The key lesson: Platforms like Meta have to optimize very quickly to a very small group of people. They can’t use audiences for this, so they have to use your optimization signal. Signal is everything.

Signal defines your audience

The best way to think about choosing signals is in terms of incentives. Let’s return to that searchlight metaphor. Every time the algorithmic operator trains that searchlight on someone who matches your description, you give them a reward.

If you make your description overly broad, you’re incentivizing that searchlight operator to bring in anyone they lay eyes on. Make it too restrictive, with many conditions, and the reward occurs rarely.

The algorithm does not pick up on secondary patterns or understand where else to look. You have to strike the right balance between quality and quantity of events.

The further your on-platform definition of success is from your actual business goal, the likelier you are to experience low-quality mid-funnel waste, which is now harder to trim out via targeting refinements.


Minor changes in signal can shift your entire audience

A poorly defined optimization signal opens the door to shortcuts and cheating.

If you don’t exclude the people you’ve already found who match your description, you risk incentivizing the algorithm to keep delivering to them rather than reaching anyone new.

Ad algorithms favor the path of least resistance and will usually give you the bare minimum of what you ask for if you let them.

For example, if you ask for landing page (LP) views, you may get LP views that bounce immediately. If you ask for form submits that potential B2B customers and bots alike can complete, you’ll likely get a flood of bots that overwhelm the algorithm with noise and leave you with unqualified traffic. This is the lesson of the story of the monkey’s paw: as marketers you must be careful what you wish for, because you may get traffic that does exactly that and no more.

The further your on-platform definition of success is from your actual business goal, the likelier you are to experience low-quality mid-funnel waste, which is now harder to trim out via targeting refinements.

The solution is to be specific with what you want, and to set conscious quality thresholds:

- When you ask for ‘on platform’ events, the algorithms are very good at finding the people who take those events, but those people are unlikely to overlap with a high-value audience (who are inherently more competitive and expensive to reach)

- Align your signal as closely to real business value / bottom-line objective as possible while maintaining sufficient throughput to learn + test – Meta defines ‘sufficient throughput’ as roughly 50 ad-set level conversions per week

Let’s put these into action with our B2B lead gen example. Rather than sending a success signal for every form submit, let’s add a few simple hacks:

- Limit your success event to those which specifically give the answers you most want.

- Let’s also exclude all submits from “gmail.com” accounts to instead optimize to those submitting business emails.

- Finally, we’ll add a dummy option (e.g. “Select an Option”) as the default choice in a dropdown. Don’t send a success event for anyone who leaves it on “Select an Option”.
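The three rules above could be sketched as a single check that runs before any success event is sent to the channel. This is a minimal sketch, not a platform API: the `FormSubmit` fields, the `company_size` dropdown and the set of qualifying answers are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

FREE_EMAIL_DOMAINS = {"gmail.com"}        # the example above; extend as needed
PLACEHOLDER_OPTION = "Select an Option"   # the dummy default in the dropdown
# Hypothetical: the dropdown answers you most want to optimize toward.
QUALIFYING_ANSWERS = {"Enterprise", "Mid-Market"}


@dataclass
class FormSubmit:
    email: str
    company_size: str  # hypothetical dropdown field


def should_send_success_event(submit: FormSubmit) -> bool:
    """Return True only for submits that pass all three quality rules."""
    domain = submit.email.rsplit("@", 1)[-1].strip().lower()
    if domain in FREE_EMAIL_DOMAINS:
        return False  # personal email, not a business address
    if submit.company_size == PLACEHOLDER_OPTION:
        return False  # the dropdown was never touched
    return submit.company_size in QUALIFYING_ANSWERS


submits = [
    FormSubmit("ana@acme-corp.com", "Enterprise"),
    FormSubmit("bot123@gmail.com", "Enterprise"),
    FormSubmit("sam@acme-corp.com", "Select an Option"),
]
for s in submits:
    print(s.email, should_send_success_event(s))
```

Every submit is still captured as a lead; only the signal reported back to the ad platform is filtered.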

Optimizing to bots is easy. Optimizing to value is hard.

Lower quality audiences are generally cheaper for platforms to find ... incorporating a sense of value to your signal reduces your chances of being served the bottom of the barrel and wasting media spend.


Further refine your audience by enriching your signal

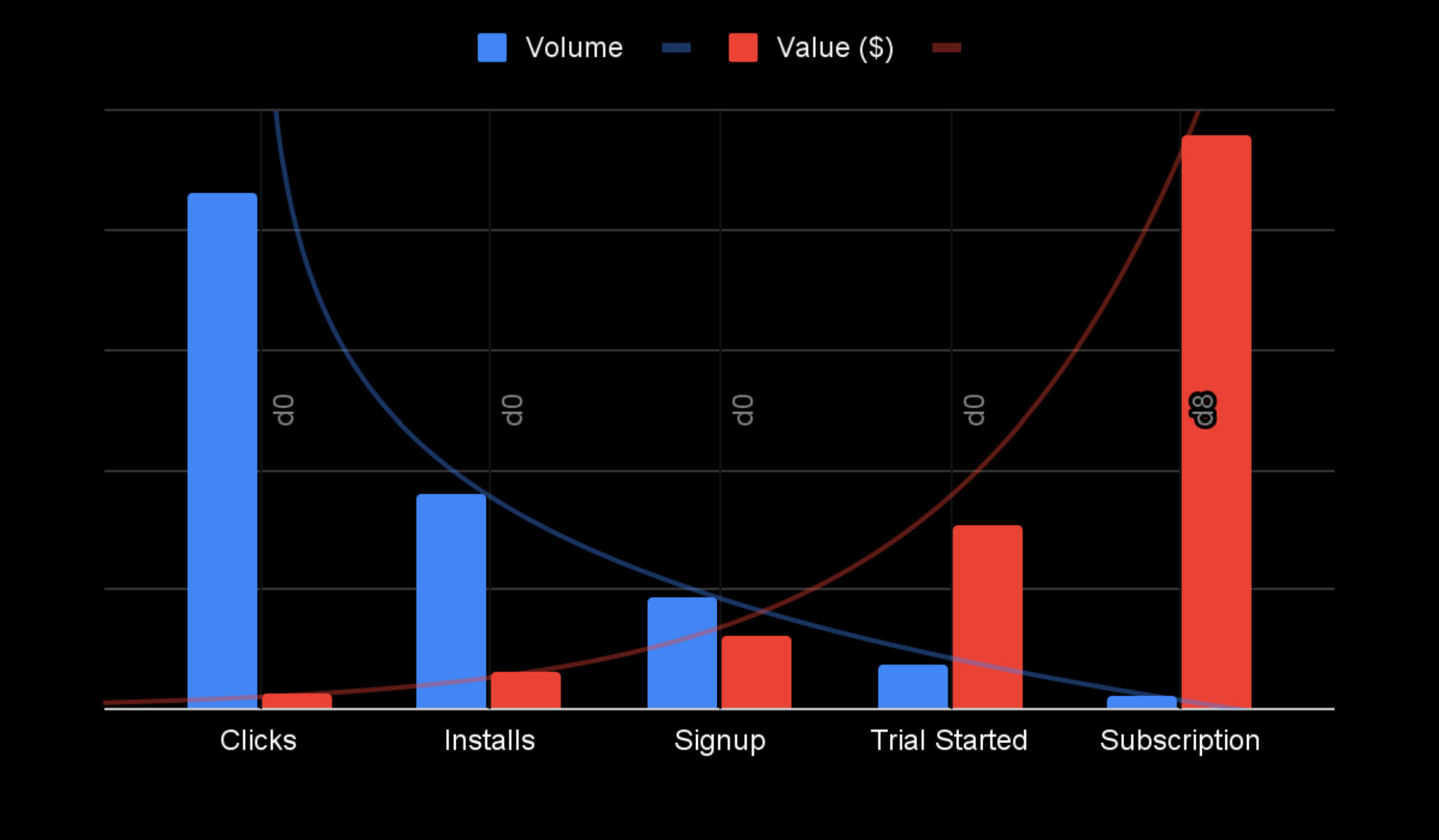

So not every converter is equal. Ultimately, we want to focus campaigns more on converters who are likely to pay you more.

The most direct way to do this is when revenue maps directly to the conversion event. E-commerce is the obvious example.

But we can also add proxy values to improve the quality of our signal. Some examples might be:

- Lead Gen – Predicting rough value based on the answers to our lead-gen form, e.g. a Sole Proprietor/SMB vs. Enterprise

- Free Trial model – Seven day free trials mean that converted subscriptions come in too late to be of use in bidding. Instead, we can create an onboarding flow to learn more about the user’s intent level. By learning what responses correlate with higher subscription rates, we can align our bidding.

- Incorporating third-party data – Particularly in finance, where Credit and Know Your Customer checks are so common, we can bid up for predicted high-net-worth individuals, and audience targeting will skew toward them.

- Leading indicators as a predictive negative signal – Trial cancellation rate, monthly plans, etc.

All of these add valuable enriched data to focus in on our target audience:

- They reduce latency – All paid media platforms hate delays between click and conversion. When it takes time to figure out whether a customer is good or not, analyze which earlier signals predict long-term quality & feed those to channels.

- They reward platforms for higher quality – Lower quality audiences are generally cheaper for platforms to find (after all, there are more of them). Adding value reduces your chances of being served the bottom of the barrel and wasting media spend.
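As a rough illustration of a proxy value for lead gen, one option is to multiply each segment’s historical close rate by its average deal revenue and send that expected value with the lead event. Everything here is an assumption for the sake of the sketch: the segment names mirror the form example, the close rates and revenue figures are made up, and the payload is a generic dictionary rather than any specific platform’s API.

```python
# Hypothetical historical stats per form answer: (close rate, average revenue in USD).
SEGMENT_STATS = {
    "Sole Proprietor/SMB": (0.10, 2_000.0),
    "Enterprise": (0.04, 50_000.0),
}


def proxy_value_usd(segment: str) -> float:
    """Expected revenue of a lead: close rate x average deal revenue."""
    close_rate, avg_revenue = SEGMENT_STATS.get(segment, (0.0, 0.0))
    return round(close_rate * avg_revenue, 2)


def lead_event(segment: str) -> dict:
    """Generic event payload (illustrative shape, not a real platform schema)."""
    return {"event": "lead", "value": proxy_value_usd(segment), "currency": "USD"}


for segment in SEGMENT_STATS:
    print(segment, lead_event(segment))
```

With these made-up numbers an Enterprise lead is reported as worth ten times an SMB lead, even though it closes less often, so value-based bidding has a reason to pay more for it.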

Again, signal here is the key thing that actively defines your audience.

Finding the right event = satisfying three things:

- Quality (Value) – users in success signal are predictive of business value

- Volume – users in success signal occur frequently enough to drive the channel & testing flexibility

- Time (Latency) – users in success signal reliably take the action within 24 hours of click
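One way to weigh these three criteria is to treat volume and latency as hard thresholds and then pick the most valuable event that clears both. The sketch below assumes you can measure each candidate event’s weekly volume, its 90th-percentile time from click, and the share of its users who go on to pay; the field names and the example numbers are hypothetical. The thresholds come from the text: roughly 50 conversions per ad set per week and 24 hours from click.

```python
from dataclasses import dataclass
from typing import Optional

MIN_WEEKLY_CONVERSIONS = 50  # Meta's rough "sufficient throughput" guidance
MAX_LATENCY_HOURS = 24       # action should reliably land within a day of click


@dataclass
class CandidateEvent:
    name: str
    weekly_conversions: int        # per ad set
    p90_hours_from_click: float    # hypothetical latency measure
    paying_customer_rate: float    # hypothetical quality measure (0 to 1)


def is_viable(event: CandidateEvent) -> bool:
    """Volume and latency act as hard gates."""
    return (event.weekly_conversions >= MIN_WEEKLY_CONVERSIONS
            and event.p90_hours_from_click <= MAX_LATENCY_HOURS)


def pick_event(candidates: list[CandidateEvent]) -> Optional[CandidateEvent]:
    """Among viable events, choose the one most predictive of business value."""
    viable = [e for e in candidates if is_viable(e)]
    return max(viable, key=lambda e: e.paying_customer_rate, default=None)


candidates = [
    CandidateEvent("form_submit", 400, 0.5, 0.02),
    CandidateEvent("qualified_lead", 80, 1.0, 0.15),
    CandidateEvent("closed_deal", 5, 720.0, 1.00),
]
best = pick_event(candidates)
print(best.name if best else "no viable event")  # qualified_lead
```

In practice the gates are softer than this, which is where the testing and tinkering described next comes in.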

Depending on the overall size of your business, your budget, and your conversion rates & time lag through the funnel, you will have several options for defining success. For some businesses this may be quite straightforward. For others, the tradeoffs between value & volume will be significant; testing & tinkering will help find the sweet spot that allows for sufficient channel testing (creative testing silos, new geographies & audiences, smaller initiatives, etc.) yet also drives high-value users.

At Headlight, the diverse nature of our client portfolio has given us a breadth of expertise in solving these challenges. From premium products that cost thousands of dollars, to verticals like insurance where user value isn’t readily apparent, we are well-versed in creative approaches to fine-tuning the optimization levers to deliver outlier results. To learn more about how we could help your business, get in touch below.

Headlight’s team of experts is offering a free strategic Audit Session across Growth, Creative & Analytics – a unique opportunity for brands to learn more about how to scale spend with incrementality in mind.